Responsible AI, The Lumiera Way

The Lumiera Foundation™ for Responsible AI, and a comprehensive look at why RAI matters across various aspects of AI implementation.

Sep 19, 2024 · 5 min read

Intro

In previous issues of the Loop, we explored global perspectives on responsible AI (RAI), including the GIRAI's findings and trends from Stanford's AI Index Report. Today, we're introducing the Lumiera Foundation™ for RAI, our comprehensive approach that advances industry understanding of responsible AI. Read on to learn how this framework can help organisations take action.

In this week's newsletter

What we’re talking about: The Lumiera Foundation for Responsible AI and a comprehensive look at why RAI matters across various aspects of AI implementation.

How it’s relevant: As AI adoption accelerates, organisations need a clear framework to guide responsible development and deployment, balancing innovation with ethical considerations.

Why it matters: Implementing responsible AI practices can lead to innovation, improved stakeholder trust, reduced legal and reputational risks, and more sustainable long-term AI adoption.

Big tech news of the week

🍓 OpenAI releases o1, its first model with ‘reasoning’ abilities. The company believes the model, previously code-named “strawberry,” represents a brand-new class of capabilities.

👓 Mistral AI, a French startup, has entered the multimodal AI arena with the release of Pixtral 12B, a model capable of processing both text and images. The firm, known for its open-source large language models (LLMs), has also made the new model available on GitHub and Hugging Face for users to download and test.

🧬 Google DeepMind has unveiled an AI system called AlphaProteo that can design novel proteins that successfully bind to target molecules, potentially revolutionising drug design and disease research.

The Lumiera Foundation™ for Responsible AI

Definitions and approaches to responsible AI (RAI) vary across the industry, typically reflecting the principles, business position and interests of the organisation providing the definition. At Lumiera, we look beyond any single set of pillars when guiding organisations towards responsible implementation.

We’ve extracted a set of foundational themes that span the leading definitions for RAI:

Comprehensive approach covering design, development, and deployment

Key principles: fairness, transparency, trust, privacy, security, safety

Alignment with human rights, stakeholder values, and legal standards

Ethical considerations and accountability

Consideration of broader societal impacts

All of these themes need to be taken into account when building your own set of guiding principles. Moving fast is exciting, but thinking things through leads to lasting success. Grounding your principles in these themes supports a more effective AI strategy and ultimately promotes adoption.

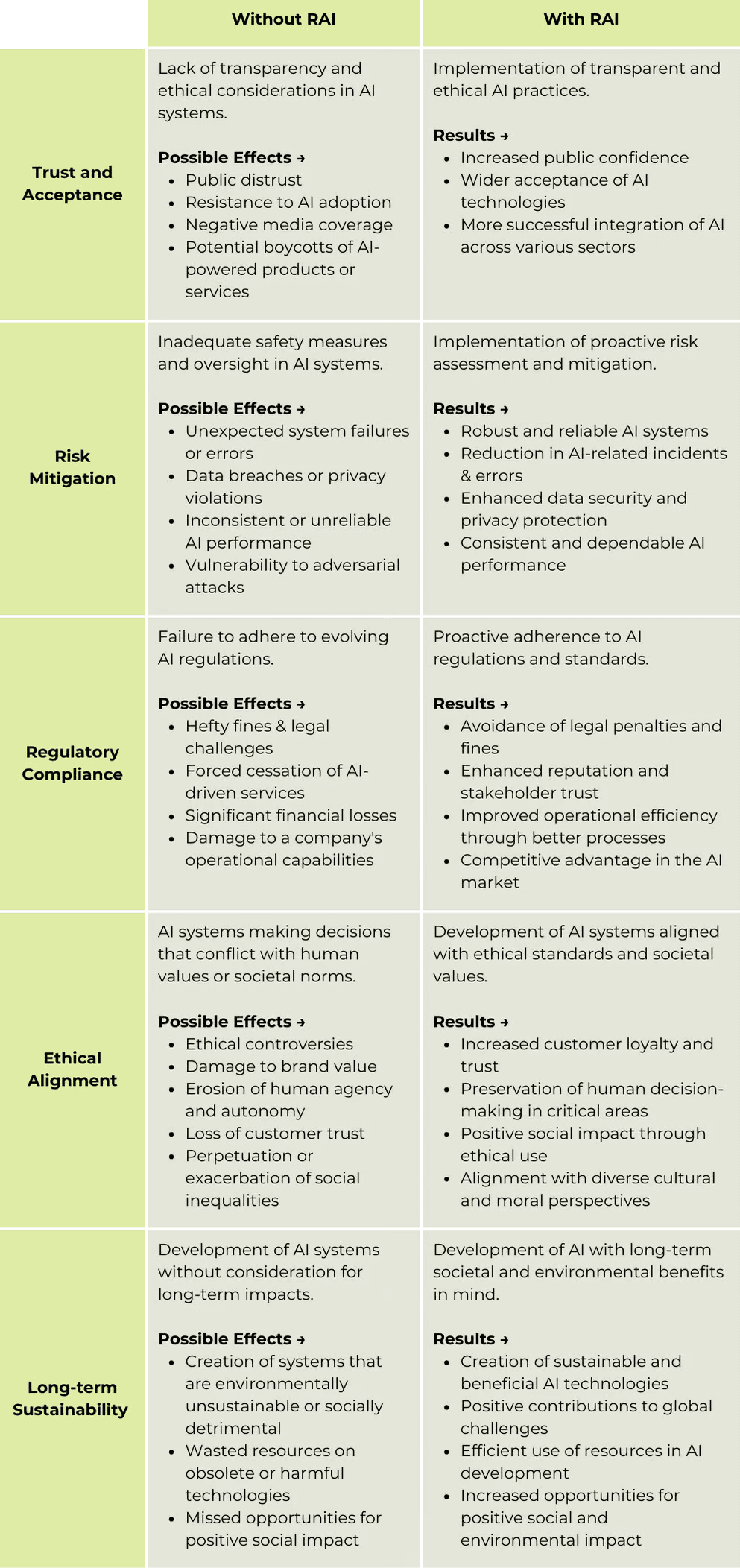

Why Responsible AI Matters

Take action! From Principles to Practice 🔆

To put the Lumiera Foundation for Responsible AI into practice, our approach focuses on:

Customised Implementation: Tailoring RAI practices to each organisation's unique context and needs.

Cross-functional Collaboration: Bringing together diverse expertise to address the multifaceted challenges of RAI, leading to increased perspective density.

Practical Tools and Metrics: Developing concrete, measurable indicators of RAI performance.

Cultural Integration: Embedding RAI principles into the organisational culture, not just technical processes.

Stakeholder Engagement: Facilitating meaningful dialogue with all affected parties to ensure various perspectives are considered.

Do you want to know how to future-proof your AI projects through the Lumiera framework? Send us an e-mail at hello@lumiera.ai and we will get things rolling!
